The leader of the group Human-Centered Data Analytics: Laura Hollink

Human-Centered Data Analytics

The HCDA group focuses on exploring human-centered, responsible AI in culture and media to ensure inclusive digital systems that promote diversity and combat misinformation.

We investigate human-centered, responsible AI in the culture and media sectors.

How can we ensure that digital systems are inclusive, promote diversity, and can be used to combat misinformation? The HCDA group addresses these questions. Our work draws on a wide range of techniques, such as statistical AI (machine learning), symbolic AI (knowledge graphs, reasoning), and human computation (crowdsourcing). By analyzing empirical evidence of human interactions with data and systems, we derive insights into how design and implementation choices affect users.

We maintain close collaborations with professionals from the culture and media sectors, as well as social scientists and humanities scholars, through the Cultural AI Lab and the AI, Media and Democracy Lab. These interdisciplinary labs provide us with opportunities to work with real data and real-world use cases.

Examples of recent research topics: measuring bias and diversity in recommender systems; examining biased (colonial) terminology in knowledge graphs; developing transparent techniques for misinformation detection.

Publications

All publications

Courses

- The Social Web (30 Oct 2024 - 15 Dec 2024)

- Meaningful (Linked) Data Interaction (15 Nov 2023 - 4 Feb 2024)

- The Social Web (1 Nov 2023 - 15 Dec 2023)

Current projects with external funding

- Culturally aware AI (AI:CULT)

- HAICu: Digital Humanities - Artificial Intelligence - Cultural heritage (HAICu)

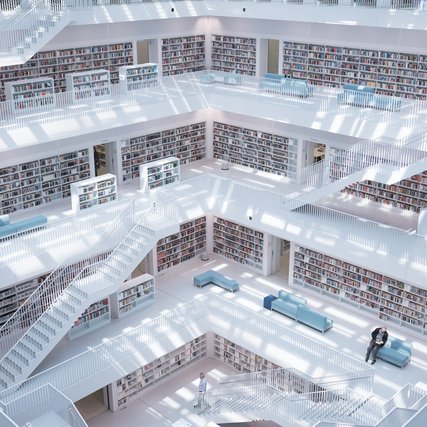

- Responsible Recommenders in the Public Library (PPS Koninklijke Bibliotheek)

- The eye of the beholder: Transparent pipelines for assessing online information quality (The eye of the beholder)