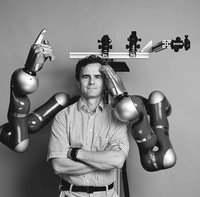

Jan Peters has been a full professor (W3) for Intelligent Autonomous Systems at the Computer Science Department of the Technische Universitaet Darmstadt since 2011 and, at the same time, has been head of the research department on Systems AI for Robot Learning (SAIROL) at the German Research Center for Artificial Intelligence (Deutsches Forschungszentrum für Künstliche Intelligenz, DFKI) since 2022. He is also a founding research faculty member of the Hessian Center for Artificial Intelligence. Jan Peters has received the Dick Volz Best 2007 US PhD Thesis Runner-Up Award, the Robotics: Science & Systems - Early Career Spotlight, the INNS Young Investigator Award, and the IEEE Robotics & Automation Society's Early Career Award, as well as numerous best paper awards. He received an ERC Starting Grant in 2015 and was appointed IEEE Fellow in 2019, ELLIS Fellow in 2020, and AAIA Fellow in 2021.

Despite having been a faculty member at TU Darmstadt only since 2011, Jan Peters has already nurtured a series of outstanding young researchers into successful careers. These include two dozen new faculty members at leading universities in the USA, Japan, Germany, Canada, Finland, Vietnam and Holland, postdoctoral scholars at top computer science departments (including MIT, CMU, and Berkeley) and young leaders at top AI companies (including Amazon, Boston Dynamics, Google and Facebook/Meta). Two of his graduates have received the Best European Robotics PhD Thesis Award and three further graduates have been runners-up for this award.

Jan Peters studied Computer Science, Electrical, Mechanical and Control Engineering at TU Munich and FernUni Hagen in Germany, at the National University of Singapore (NUS) and at the University of Southern California (USC) in Los Angeles. He holds four Master's degrees in these disciplines as well as a Computer Science PhD from USC. Jan Peters has conducted research in Germany at DLR, TU Munich and the Max Planck Institute for Biological Cybernetics (in addition to the institutions above), in Japan at the Advanced Telecommunication Research Center (ATR), at USC and at both NUS and Siemens Advanced Engineering in Singapore. He has led research groups on Machine Learning for Robotics at the Max Planck Institutes for Biological Cybernetics (2007-2010) and Intelligent Systems (2010-2021).

Talk details

Inductive Biases for Robot Reinforcement Learning

The quest for intelligent robots capable of learning complex behaviors from limited data hinges critically on the design and integration of inductive biases—structured assumptions that guide learning and generalization. In this talk, Jan Peters explores the foundational role of inductive biases in robot learning, drawing from insights in control theory, neuroscience, and machine learning. He discusses how exploiting physical principles, modular control structures, symmetry, temporal abstraction, and domain-specific priors can drastically reduce sample complexity and improve robustness in robotic systems.

Through a series of concrete examples—including robot table tennis, tactile manipulation, quadruped locomotion, and dynamic motor skill learning on anthropomorphic arms—Peters illustrates how inductive biases enable efficient policy search, reinforcement learning, and imitation learning. These applications demonstrate how embedding prior knowledge about motor primitives, control hierarchies, or contact dynamics helps robots acquire versatile skills with minimal data. The talk concludes with a vision for future robot learning systems that integrate such structured biases with modern data-driven methods, enabling scalable, adaptive, and generalizable autonomy in real-world environments.